In today’s rapidly evolving healthcare landscape, artificial intelligence (AI) is becoming a powerful tool for improving patient outcomes. From diagnosing diseases to streamlining administrative tasks, AI promises to change the way we approach healthcare. As we embrace this technology, however, it’s crucial that AI systems uphold the core principles of human rights, so that every patient is treated with fairness, dignity, and respect.

Integrating human rights principles into AI design isn’t just a matter of ethics—it’s a pathway to more equitable and effective healthcare systems. By aligning AI healthcare technologies with the values of respect, fairness, and autonomy, we can significantly improve patient outcomes while reducing disparities in care.

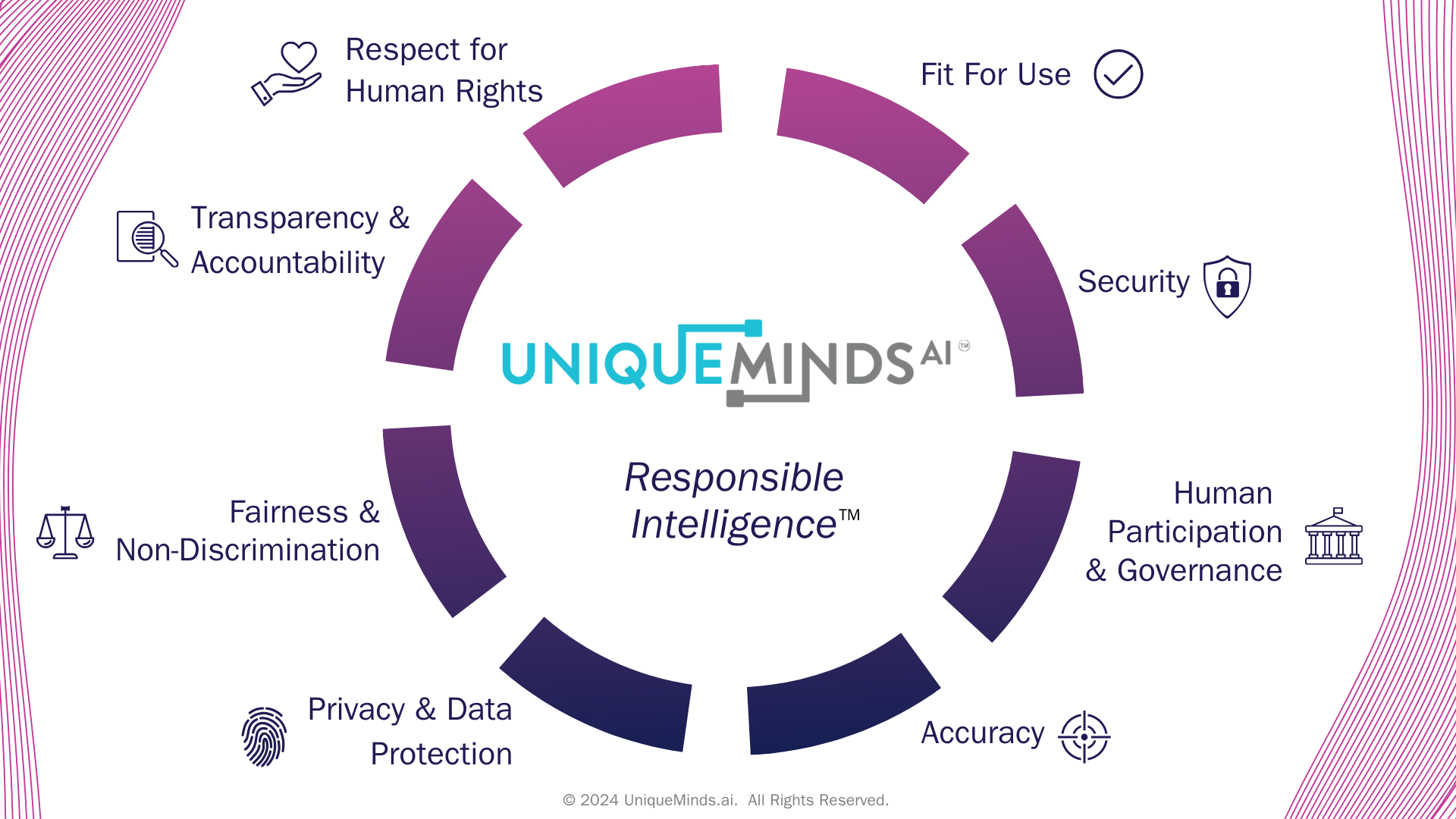

How the UniqueMinds.AI Responsible AI Framework for Healthcare Ensures Human Rights in AI Decisions

At UniqueMinds.AI, we believe that placing human rights at the heart of AI systems is about more than addressing ethical concerns: it is about creating AI solutions that are secure, unbiased, and privacy-preserving. To achieve this, we’ve developed the Responsible AI Framework for Healthcare (RAIFH), which is designed to integrate human rights principles into every stage of the AI development process.

The RAIFH is structured around several core pillars, each focused on safeguarding human rights while enabling the responsible use of AI in healthcare:

- Equity and Inclusion: Our framework emphasizes the importance of diverse datasets and equitable treatment algorithms, ensuring that AI systems are designed to serve all populations fairly, including underserved communities.

- Transparency and Accountability: The RAIFH ensures that every decision made by AI systems is traceable and explainable. Through robust audit trails and clear documentation, we empower healthcare organizations to be transparent in how AI models make decisions, building trust among patients and stakeholders.

- Patient Autonomy and Consent: The RAIFH promotes patient empowerment by ensuring that AI technologies are used to augment, not replace, human judgment. Our framework places patient autonomy at the forefront, ensuring that patients are informed, their privacy is respected, and their consent is always prioritized.

- Continuous Monitoring for Bias: Our framework incorporates ongoing monitoring and model auditing to identify and correct bias that may creep into AI systems. By continually evaluating and updating algorithms, we reduce the risk of harmful or discriminatory practices emerging.

- Governance and Ethical Oversight: The RAIFH provides a comprehensive governance structure that helps healthcare organizations manage the ethical implications of AI. This includes ensuring compliance with global data protection standards, ethical guidelines, and regulatory requirements.

By embedding these principles into the development, deployment, and monitoring of AI systems, the RAIFH keeps human rights central to every decision made by AI in healthcare. Through this framework, we work to deliver AI-driven solutions that are not only innovative and efficient but also ethical, inclusive, and respectful of all individuals.
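To make the traceability pillar more concrete, here is a minimal sketch of a tamper-evident audit trail for AI decisions. It is an illustration only, not the RAIFH implementation: the function names (`append_audit_record`, `verify_log`) and the record fields are assumptions chosen for this example. Each record stores the hash of the previous one, so a later change to any stored decision can be detected.

```python
import hashlib
import json
from datetime import datetime, timezone


def append_audit_record(log, model_version, input_summary, output, explanation):
    """Append a decision record whose hash also covers the previous record's hash.

    All field names here are hypothetical. `input_summary` should hold
    de-identified features only, never raw patient identifiers.
    """
    prev_hash = log[-1]["hash"] if log else ""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "input_summary": input_summary,
        "output": output,
        "explanation": explanation,
        "prev_hash": prev_hash,
    }
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["hash"] = hashlib.sha256(payload).hexdigest()
    log.append(record)
    return record


def verify_log(log):
    """Return True if no record was altered and the chain of hashes is intact."""
    prev_hash = ""
    for record in log:
        body = {k: v for k, v in record.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        if record["prev_hash"] != prev_hash:
            return False
        if hashlib.sha256(payload).hexdigest() != record["hash"]:
            return False
        prev_hash = record["hash"]
    return True


audit_log = []
append_audit_record(
    audit_log,
    model_version="risk-model-1.0",          # hypothetical model name
    input_summary={"age_band": "60-69"},     # de-identified example input
    output="high risk",
    explanation="score above review threshold",
)
print(verify_log(audit_log))
```

A production system would also need access controls, secure storage, and retention policies; the sketch only shows how a decision, its model version, and its explanation can be recorded together in a way that can be checked later.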

Ensuring Equity in Healthcare Access

One of the most significant challenges in healthcare today is ensuring equitable access to care for all patients, regardless of their socioeconomic background, ethnicity, or geographical location. Traditional healthcare systems often struggle with systemic biases—whether in the allocation of resources, access to treatment, or the quality of care provided.

AI systems designed with human rights at their core can help mitigate these biases. For example, AI can analyze vast datasets to uncover health disparities, enabling healthcare organizations to make more informed decisions about resource allocation and access to care. By training AI systems on diverse, representative datasets, we can reduce biases that may otherwise affect underserved populations and improve health equity, so that no one is left behind.
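One simple way to check whether a dataset is representative is to compare each group's share of the training records with its share of the population the system is meant to serve. The sketch below is a hedged illustration: the function name `representation_gaps`, the `region` field, the reference shares, and the 5-point tolerance are all assumptions made for this example, not values from any real dataset.

```python
from collections import Counter


def representation_gaps(records, group_key, reference_shares, tolerance=0.05):
    """Return groups whose share of the dataset falls short of the reference
    share by more than `tolerance` (all shares are fractions between 0 and 1)."""
    counts = Counter(record[group_key] for record in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if expected - observed > tolerance:
            gaps[group] = {"observed": round(observed, 3), "expected": expected}
    return gaps


# Illustrative, made-up data: 80 urban and 20 rural training records.
training_records = [{"region": "urban"}] * 80 + [{"region": "rural"}] * 20

# Hypothetical population shares for the community being served.
population_shares = {"urban": 0.6, "rural": 0.4}

print(representation_gaps(training_records, "region", population_shares))
# → {'rural': {'observed': 0.2, 'expected': 0.4}}
```

A flagged group is a prompt for further investigation, such as collecting more data or reweighting, rather than proof that the resulting model is biased.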

Safeguarding Patient Dignity and Autonomy

At the heart of human rights is the principle of respecting individual dignity and autonomy. In healthcare, this means empowering patients to make informed decisions about their care, protecting their privacy, and ensuring that their voices are heard. When AI systems are built with these values in mind, they not only enhance patient care but also help preserve the human element of healthcare.

For example, AI-driven tools can assist patients by providing personalized health insights, enabling them to make better decisions about their treatment options. AI can also give healthcare providers real-time, data-driven insights that help them align their recommendations with patients’ needs and preferences, supporting patient-centered care. By placing human rights at the core of AI, we ensure that the technology supports, rather than replaces, the patient-provider relationship.

Promoting Fairness in Healthcare Outcomes

Another key aspect of human rights in healthcare is ensuring fairness in treatment and outcomes. Discrimination, whether based on race, gender, or other factors, has no place in healthcare. Unfortunately, AI systems—if not properly designed—can perpetuate or even exacerbate existing biases. For instance, biased algorithms may lead to inaccurate risk assessments or unequal treatment recommendations, undermining the trust that patients place in healthcare institutions.

However, when AI systems are intentionally designed to uphold fairness and equality, they have the potential to promote more accurate diagnoses and better outcomes for all patients. This involves continuously auditing AI models to ensure that they are free from bias and that they produce consistent, equitable results across different demographic groups. It also involves educating healthcare professionals about the ethical implications of AI and ensuring that AI tools are used responsibly and transparently.
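As a rough illustration of what such an audit can measure, the sketch below computes two common per-group metrics for a binary classifier: the selection rate (how often the model flags patients in a group) and the true positive rate (how often it catches patients who actually have the condition). The function names, group labels, and data are hypothetical, and which metrics and gap thresholds are appropriate depends on the clinical context.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate and true positive rate for binary labels (1 = positive)."""
    stats = defaultdict(lambda: {"n": 0, "pred_pos": 0, "actual_pos": 0, "true_pos": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        s = stats[group]
        s["n"] += 1
        s["pred_pos"] += int(pred == 1)
        s["actual_pos"] += int(truth == 1)
        s["true_pos"] += int(truth == 1 and pred == 1)
    rates = {}
    for group, s in stats.items():
        rates[group] = {
            "selection_rate": s["pred_pos"] / s["n"],
            # TPR is undefined when a group has no actual positives.
            "tpr": s["true_pos"] / s["actual_pos"] if s["actual_pos"] else None,
        }
    return rates


def max_gap(rates, metric):
    """Largest difference in `metric` between any two groups (ignores undefined values)."""
    values = [r[metric] for r in rates.values() if r[metric] is not None]
    return max(values) - min(values) if values else 0.0


# Illustrative, made-up audit sample with two demographic groups.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = group_rates(y_true, y_pred, groups)
print(rates)
print("TPR gap:", max_gap(rates, "tpr"))  # → TPR gap: 0.5
```

In this made-up sample the model catches every true case in group A but only half of those in group B, the kind of gap a recurring audit is meant to surface. Open-source libraries such as Fairlearn offer more complete tooling for the same idea.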

Building Trust in Healthcare Technology

Ultimately, the success of AI in healthcare depends on trust—both from patients and healthcare providers. If patients don’t believe that AI will respect their rights or produce fair outcomes, they will be hesitant to adopt or rely on AI-driven solutions. By integrating human rights into AI systems, healthcare organizations can build trust and demonstrate their commitment to ethical practices.

Transparency plays a key role in this trust-building process. Patients should understand how their data is being used, and healthcare providers should be able to explain how AI systems arrive at their decisions. By aligning AI technologies with the principles of human rights and ethics, organizations can reassure patients that their dignity, autonomy, and privacy are protected, fostering greater acceptance of AI in healthcare.

The Path Forward: Ethical AI for Better Patient Care

As we continue to integrate AI into healthcare systems, it’s essential that human rights are not an afterthought but a foundational component of AI development. When AI systems are designed with the principles of respect, equity, fairness, and transparency in mind, they have the power to transform patient care for the better.

The promise of AI in healthcare is immense, but it is only through ethical development and a commitment to human rights that we can ensure this technology benefits all patients—especially the most vulnerable. By prioritizing human rights in AI healthcare systems, we create a future where technology and humanity work together to achieve better, more equitable health outcomes for everyone.

Andrea Gaddie Bartlett is a visionary leader with more than 20 years of experience driving digital transformation in the healthcare, life sciences, and technology industries. She is the CEO and founder of UniqueMinds.AI.